Uniform developer experience using Visual Studio Code Dev Containers

Local development environments may be simultaneously the best and the worst part of a developer's day-to-day life.

It’s frustrating when you join a project only to discover that there is absolutely no guidance on how to set up your machine to actually start working on it. And it’s equally frustrating when the documentation exists but is either not actively maintained, obsolete, or just too specific to someone else’s preferences, leaving you to troubleshoot every issue you run into while trying to bring everything up.

And that’s before everyone’s environment starts to diverge, or even “rot”, which is a different kettle of fish altogether.

For this reason, containerization technologies have increasingly become a staple of local environments. Instead of installing a database engine on your machine to share across different codebases, you can spin up a container just for the one you’re working on.

Need different versions? Just update the image. Oh, we’re switching to Postgres? Just pull the new one. You completely busted something and want to start from a clean state?

Just create a new one. What would have cost you an entire day reinstalling your machine now takes a single command and less than 30 seconds.

Downsides

Containers are useful but not perfect. Unless you plan to work entirely in vim inside the container (or emacs, for those who prefer it), you’re still going to need an editor installed locally on your machine, plus some way to share code and other data between the container and the host, where your editor can consume it.

This is, to put it lightly, often less than optimal: file sharing in Docker for macOS and Windows is notoriously slow. There are ways to alleviate it, but you can’t completely work around it, and it adds to the setup steps outlined in your docs, ultimately making them longer, more error-prone and harder to maintain.

To some degree, you’ll also need tooling outside your container, like linters, static analyzers and other tools that run within your editor or IDE to give you the modern developer experience you’d expect today.

And this is where Visual Studio Code’s Remote Development extensions come into the picture.

Remote Development extensions

This is a set of extensions for the editor that lets you work with codebases that live on a different machine rather than on your local one.

It might not seem particularly new: plenty of editors have supported something like that in the past, but they often worked by passing files back and forth between the local and remote machines using technologies like FTP or (hopefully) SFTP.

Visual Studio Code goes one step further: it connects to the machine, downloads and runs a headless version of the editor and then connects to it.

I also like how it treats the other installed extensions: some of them, like themes, are installed locally; others, like language servers, are installed remotely; and there are APIs that extensions can leverage to work across both environments.

There are three extensions in the pack, for three different technologies:

- One lets you connect to other machines via SSH (and even supports ARMv7 and AArch64, which means working with headless SBCs like the Raspberry Pi is a breeze).

- Another one is for WSL (Windows Subsystem for Linux) and does basically what it says. This is particularly nice on Windows because it means you can keep the source code on the Linux side of WSL, avoiding the cross-VM file sharing performance hit we talked about earlier. The UI itself renders natively on the Windows side, avoiding all the issues you would have had by installing (a separate copy of) Visual Studio Code on Linux and then using a Windows X11 server like VcXsrv or X410 to get it on screen. A nice touch is that installing VSCode on Windows automatically adds a “code” command in WSL, which lets you run VSCode-on-WSL from a WSL shell just like you would on native Linux. It’s one of the little things that make the experience less jarring than it otherwise would have been.

- But the main star of the show is the Docker-specific extension. Like the other two, it’s pretty straightforward: it spins up a container, copies the headless server into it, runs it and connects to it. The best part is that everything it needs to know, including which image to use, which ports to expose and which environment variables to set, is defined in a JSON file that can be checked into your source control repository.

Long story short, the documentation for your local environment would then boil down to:

- Install Visual Studio Code

- Install the Remote Development Extensions Pack

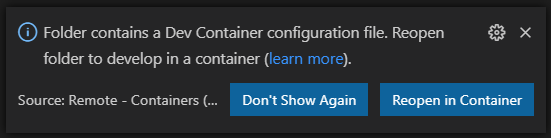

Then, when you open a workspace that has been set up this way, you’ll get a prompt offering to reopen it in a container.

Click “Reopen in Container” and a couple of minutes later you’ll be off to the races.

That’s just for the repo. How about the rest?

Setting up a local development environment is of course more than that, but since reading about something can be very boring (and I know I am), maybe it’s better to show you how to actually use it.

For this example, we’ll start with a .NET 5.0 web application. Nothing fancy: it’s just an app that exposes a REST API with a Swagger UI frontend, and uses Postgres as its main database, a couple of S3 buckets, and Redis for caching.

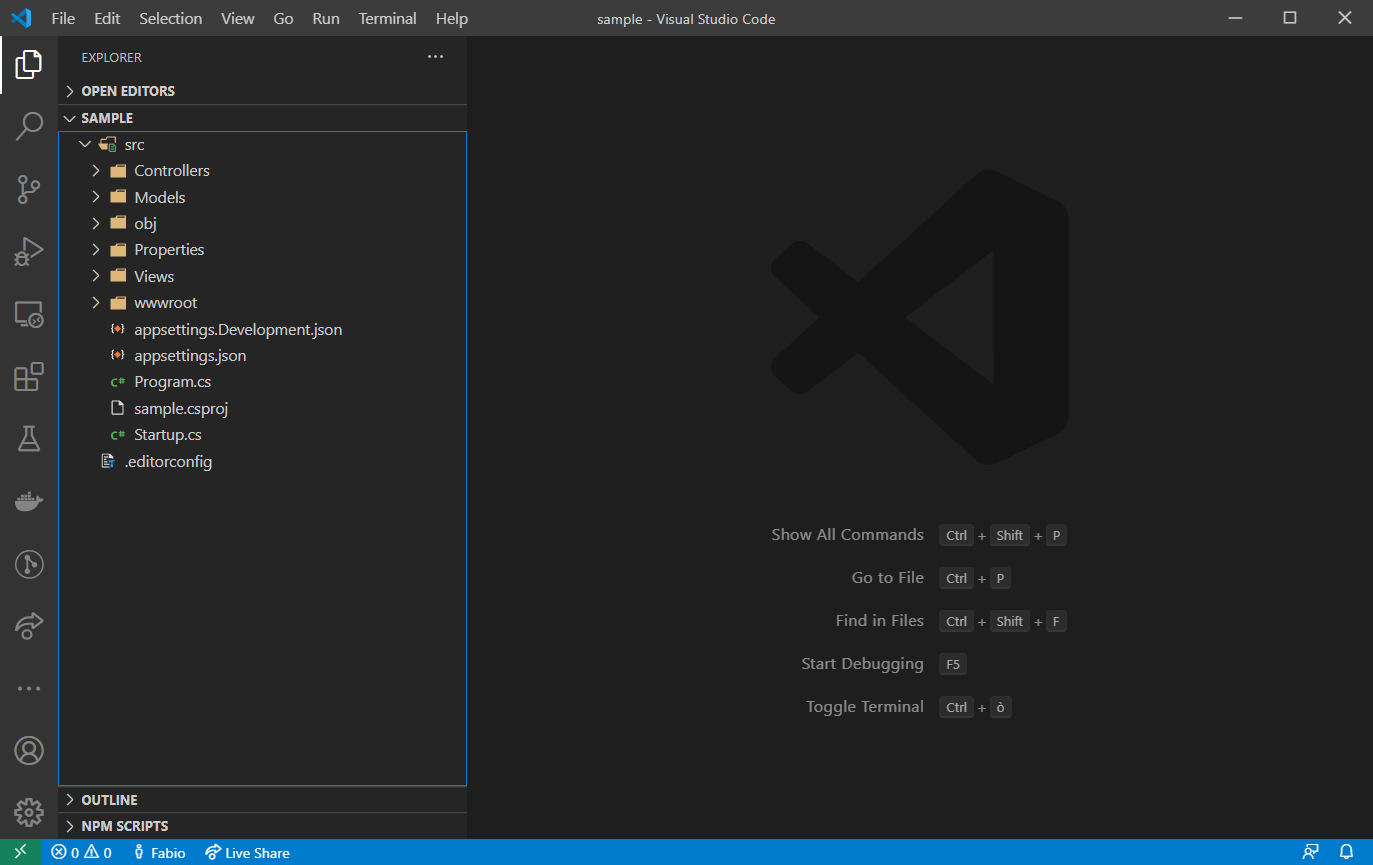

This is our starting point. We won’t go into the actual app code (partly because I’m cheating and the code is just what comes out of scaffolding), but notice that we also have an .editorconfig file there to define a style guide.

Normally this would require the developer to manually install the EditorConfig extension for this to be actually useful, but just keep this in the back of your mind for now.

Notice the green button in the lower-left corner of the window? That’s a sign that at least one of the Remote Development extensions is installed.

The first step will be to create the JSON file with all the container settings.

We have two options: it can either be in the root (named .devcontainer.json) or inside a separate directory (.devcontainer/devcontainer.json in this case).
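To make the two locations concrete, here is a small Python sketch of a lookup that checks both. The precedence it uses (the .devcontainer folder first, then the root-level file) reflects my understanding of how the tooling resolves them and should be treated as an assumption; `find_devcontainer_config` is a hypothetical helper, not part of the extension.

```python
from pathlib import Path
from typing import Optional

# Candidate locations relative to the workspace root. The order (folder
# first, then the root-level file) is an assumption of this sketch.
CANDIDATES = (
    Path(".devcontainer") / "devcontainer.json",
    Path(".devcontainer.json"),
)


def find_devcontainer_config(workspace: Path) -> Optional[Path]:
    """Return the first dev container config found under `workspace`, or None."""
    for candidate in CANDIDATES:
        path = workspace / candidate
        if path.is_file():
            return path
    return None
```

Calling `find_devcontainer_config(Path.cwd())` from the repository root would return the path of whichever file is present, or None if the repository has not been set up yet.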

We’ll go for the separate directory route and place this inside the file:

```json
{
    "image": "mcr.microsoft.com/dotnet/sdk:5.0-alpine"
}
```

Just the image name for now. Now open the Command Palette (F1 or Ctrl/Cmd+Shift+P), select “Remote-Containers: Reopen in Container” and wait (you can also find this command by clicking the button in the lower-left corner).

A few seconds (or minutes) later, we’ll be greeted by a similar-looking window; the only noticeable difference is the “Dev Container” label in the lower-left corner button.

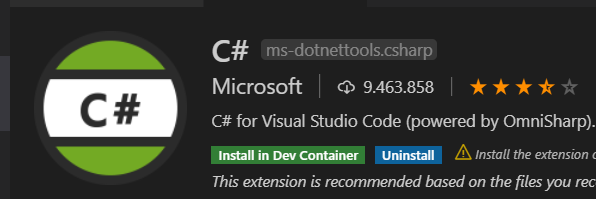

But opening any of the .cs files makes a notification appear, suggesting we install the C# extension.

That’s because the headless instance has no extensions installed; you can also tell from the left sidebar, which has far fewer icons than in the previous screenshot.

Of course, we could install it by clicking the button and call it a day, but we’d have to do that every time we rebuild the container.

Kind of defeats the purpose of making things simpler, doesn’t it?

Luckily, the JSON file comes to the rescue once again: we can list the extensions we want to use there, and they’ll be installed automatically when the container is built. Since we’re using EditorConfig, we can add its extension too!

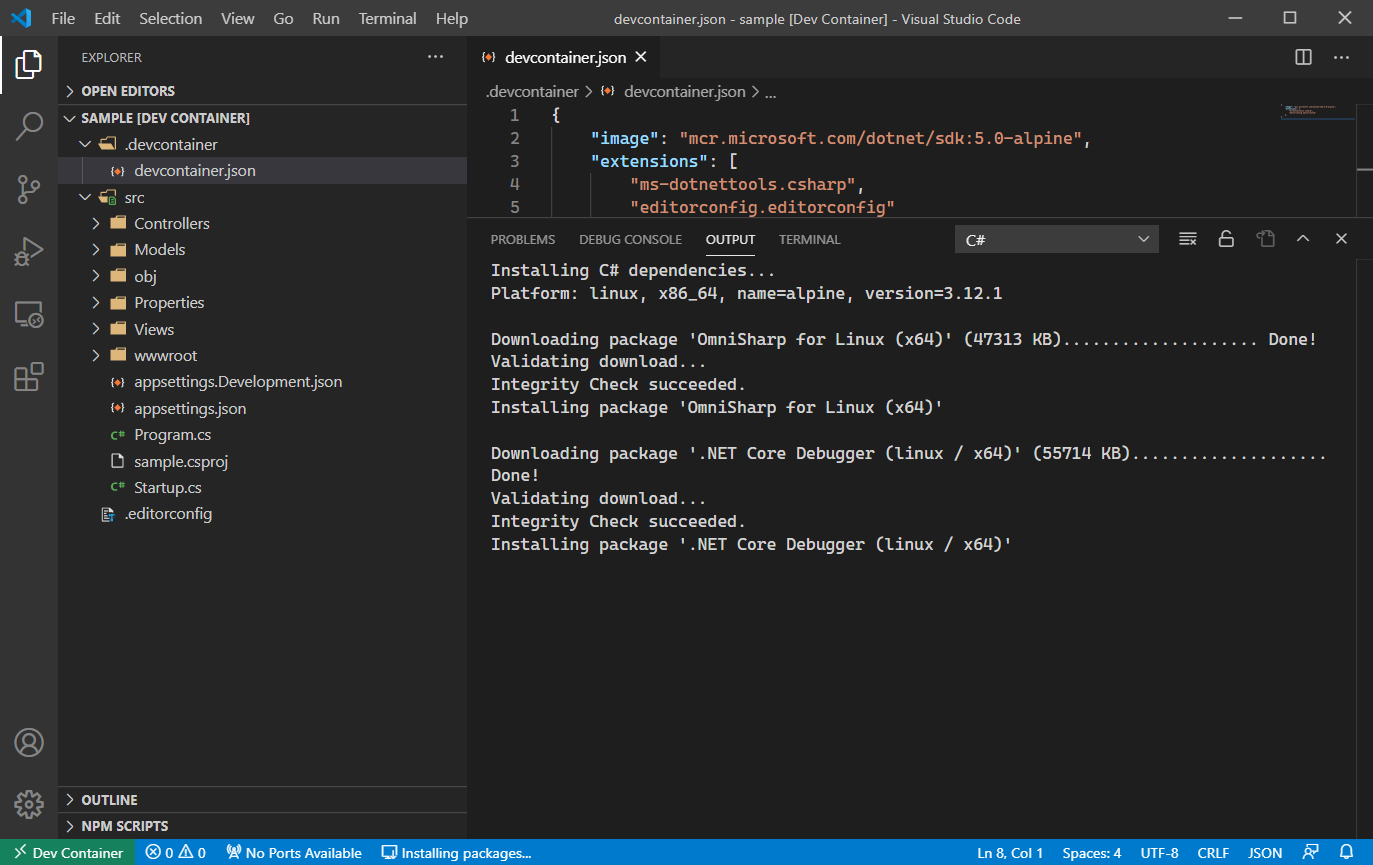

The JSON file now looks like this:

```json
{
    "image": "mcr.microsoft.com/dotnet/sdk:5.0-alpine",
    "extensions": [
        "ms-dotnettools.csharp",
        "editorconfig.editorconfig"
    ]
}
```

You can add any extension there; you can find each one’s ID on its details page in the Extensions view.

After invoking the “Rebuild Container” command from the Command Palette and waiting a couple of minutes, notice how the C# extension starts installing its dependencies. That’s because the extension itself was already preinstalled during bring-up, and we’re now seeing its first-launch dependency installation. Also notice that it installs the Linux dependencies even though I was running this on Windows.

Dockerfiles

But we’re not done yet! Sometimes it’s enough to start from an image (either a public one or a developer image maintained internally), but it can also be useful to customize it.

Of course, the extension supports this too: we just need to define a Dockerfile inside our .devcontainer directory and change our JSON to use it instead of the prebuilt image.

Let’s suppose we want to install the dotnet-format tool, which checks and formats your code according to the EditorConfig rules.

What we need is a Dockerfile like this:

```dockerfile
FROM mcr.microsoft.com/dotnet/sdk:5.0-alpine

# This is needed for Global Tools to work
ENV PATH="${PATH}:/root/.dotnet/tools"

# Install .NET Global Tools
RUN dotnet tool install -g dotnet-format
```

and to change our JSON like this (I also threw in the Docker extension):

```json
{
    "dockerFile": "Dockerfile",
    "extensions": [
        "ms-dotnettools.csharp",
        "editorconfig.editorconfig",
        "ms-azuretools.vscode-docker"
    ]
}
```

And now we can:

- Edit the source code, with all the bells and whistles like IntelliSense, code navigation, smart refactorings, etc.

- Get EditorConfig integration in the editor

- Use the CLI to autoformat the code according to the styleguide

Of course, this can be used to install anything you might need. The JSON also offers some fields where you can define commands to run at certain stages, like before or after creating the container, after starting it, etc.

For example, you could use postCreateCommand to run a script that configures LocalStack with awscli-local, using the same templates you would use to define your infrastructure on the real AWS.

In our example, we would use it to create (and maybe seed) the S3 buckets the app uses, the database, etc.
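One practical wrinkle with such a script: Compose starts the services in parallel, so a post-create step may run before Postgres or LocalStack are accepting connections. Here is a minimal Python sketch of a readiness check a post-create script could call first. `wait_for_port` is a hypothetical helper, and the timeouts are arbitrary assumptions.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass.

    Returns True as soon as the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection also resolves the host name (e.g. a Compose service name)
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable or not yet resolvable: retry until the deadline
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A post-create script could, for instance, call `wait_for_port("postgres", 5432)` before creating and seeding the database, and fail loudly if it returns False rather than half-initializing the environment.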

Speaking of LocalStack, S3 buckets and databases, our sample app uses all of them. Are we supposed to install everything inside the container?

Of course not. Docker Compose is supported too!

This makes our JSON slightly more complicated, since we now have to tell the extension a few things it previously figured out by itself.

```json
{
    "dockerComposeFile": "docker-compose.yml",
    "service": "devcontainer",
    "shutdownAction": "stopCompose",
    "workspaceFolder": "/workspace",
    "extensions": [
        "ms-dotnettools.csharp",
        "editorconfig.editorconfig",
        "ms-azuretools.vscode-docker"
    ]
}
```

Now the dockerFile field has been replaced by dockerComposeFile, and we have to specify which of the services defined in the YAML is the one vscode-server will be launched from, what to do when shutting down and, most importantly, which workspace folder to open, since it’s now our responsibility to mount the correct folder inside the container.

(Just a side note: Compose override files are supported too.)

In our case, the docker-compose.yml we’ll put inside the .devcontainer directory will look similar to this:

```yaml
version: '3'

services:
  devcontainer:
    build: .
    environment:
      - LOCALSTACK_HOST=localstack
      - CONNECTIONSTRINGS__REDIS=redis
      - CONNECTIONSTRINGS__POSTGRES=Host=postgres;Username=postgres;Password=MySecretPassword
    volumes:
      - ..:/workspace
      - ./persistence/nuget:/root/.nuget
      - /var/run/docker.sock:/var/run/docker.sock
    command: sleep infinity
    ports:
      - 5000:5000
      - 5001:5001

  localstack:
    image: localstack/localstack
    environment:
      - SERVICES=s3
      - DATA_DIR=/tmp/localstack/data
    volumes:
      - ./persistence/localstack:/tmp/localstack

  redis:
    image: redis:5.0.6-alpine

  postgres:
    image: postgres:12
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=MySecretPassword
      - PGDATA=/pgdata
    ports:
      - 5432:5432
    volumes:
      - ./persistence/postgres:/pgdata
```

There is A TON to unpack here.

For starters, we’re now defining four containers. One is the “devcontainer” we’ve worked with until now, but we’ll come back to that later.

We’ll start with the redis service, which just defines which image to use.

Since all containers are on the same virtual network, if we installed the redis-tools package inside our main container we would be able to connect to it using the redis hostname.
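If you’d rather not install redis-tools just to check that the service name resolves and answers, Python’s standard library is enough. This sketch sends a Redis PING encoded in the RESP protocol to the redis hostname. The default port 6379 and the helper names `encode_command` and `redis_ping` are assumptions for illustration.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        # Bulk string: $<byte length>\r\n<data>\r\n
        parts.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(parts)


def redis_ping(host: str = "redis", port: int = 6379, timeout: float = 2.0) -> bool:
    """Return True if the server at host:port answers PING with +PONG."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(encode_command("PING"))
        reply = conn.recv(64)
    return reply.startswith(b"+PONG")
```

Run from a terminal inside the devcontainer, `redis_ping()` should return True once the redis service is up, since Compose makes each service reachable by its name.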

Then we have postgres, which is a little more complicated: in addition to the image, we also define the environment variables for the default username and password. We also move PGDATA to a different location that’s mounted externally, so that its data persists.

This way, if we rebuild the container, we won’t lose all the data in the database. Its port is also exposed externally, so we can use local tools to connect to it if needed.

We do the same with LocalStack (you can find more info about its environment variables in its own documentation): here too we use an external volume to avoid losing data on rebuilds.

And then we have our devcontainer. Of course, this one doesn’t define an image to use; instead, it builds one from our old Dockerfile.

Some of its configuration is required for everything to work. The first volume, in particular, mounts the real workspace inside the container, something the extension did automatically until now (it’s the same path we put in the JSON). More importantly, we also have to override its command; otherwise the container would exit immediately after starting. Running sleep infinity is an easy way to keep it running indefinitely.

I also added a volume mounting the Docker socket: with that in place, we can install the Docker CLI inside the container and use it to interact with the host machine’s Docker installation. It’s pretty useful if you don’t want to jump to a separate terminal when you need to, for example, build images.
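Mounting the socket works because the Docker CLI is a client of the Docker Engine’s HTTP API, which the daemon serves on /var/run/docker.sock. As an illustration, this standard-library Python sketch issues GET /version over that socket, roughly the information `docker version` asks the daemon for. The class and function names are hypothetical; only the socket path comes from the Compose file above.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """GET `path` from the Docker Engine API and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

From inside the devcontainer, `docker_get("/version")["Version"]` should report the host daemon’s version, which confirms that the container is talking to the host’s Docker rather than to a separate one.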

Also notice the environment variables: the first one contains the hostname of the LocalStack container, which our application can use at runtime to decide whether to use the real S3 or to point the service endpoint URL at LocalStack instead.
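The app in this article is .NET, but the decision itself is language-neutral. Here it is as a Python sketch: if LOCALSTACK_HOST is set, build a LocalStack endpoint URL; otherwise return None so the SDK keeps its default AWS endpoint. The port is an assumption (4566 is LocalStack’s edge port in recent releases, while older releases exposed each service on its own port), and `s3_endpoint_url` is a hypothetical name.

```python
import os
from typing import Mapping, Optional

# Assumed LocalStack edge port; adjust for older LocalStack releases.
LOCALSTACK_EDGE_PORT = 4566


def s3_endpoint_url(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return a LocalStack S3 endpoint if LOCALSTACK_HOST is set, else None.

    None means "use the SDK's default (real AWS) endpoint".
    """
    env = os.environ if env is None else env
    host = env.get("LOCALSTACK_HOST")
    if not host:
        return None
    return f"http://{host}:{LOCALSTACK_EDGE_PORT}"
```

With boto3, for example, the result can be passed as `boto3.client("s3", endpoint_url=s3_endpoint_url())`, because `endpoint_url=None` keeps the default endpoint. Outside the devcontainer the variable is unset and nothing changes.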

The other two contain connection strings for Redis and Postgres, with variable names in the format .NET Core expects, which means we can use our YAML to seamlessly override configuration keys only when running inside the devcontainer.
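On the .NET side, the environment variables configuration provider turns a double underscore into the ":" section separator, and configuration keys are case-insensitive. As a result, CONNECTIONSTRINGS__POSTGRES overrides ConnectionStrings:Postgres, which is the key GetConnectionString("Postgres") reads. The Python sketch below only mimics that naming convention and splits the Key=Value; connection string so you can inspect it. It is not the .NET implementation, and both helper names are hypothetical.

```python
def env_to_config_key(name: str) -> str:
    """Map an environment variable name to a .NET-style configuration key.

    A double underscore becomes the ':' section separator. Keys are matched
    case-insensitively, so the result is normalized to lower case here.
    """
    return name.replace("__", ":").lower()


def parse_connection_string(value: str) -> dict:
    """Split a 'Key=Value;Key=Value' connection string into a dict.

    Simplified: assumes values contain no ';' and no quoting.
    """
    result = {}
    for part in value.split(";"):
        if "=" not in part:
            continue  # skip empty or malformed segments
        key, val = part.split("=", 1)
        result[key.strip().lower()] = val.strip()
    return result
```

Applied to the Compose file above, CONNECTIONSTRINGS__POSTGRES maps to connectionstrings:postgres with host "postgres". That host is the Compose service name, which only resolves inside the devcontainer network.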

Conclusions

If I seem enthusiastic about this feature, it’s because I am: it opens up so many scenarios and solves so many problems that it’s hard not to use it.

And this is exactly what we’re doing internally: authoring standard developer images and templates to roll out across projects.

We first tried it out on one of our .NET projects while it was still in preview, and we immediately saw how much easier it made onboarding, as well as sharing samples and experiments during our tech Fridays. It could also make it simpler to set up a lab for an internal workshop.

If you want to learn more, head to the official docs, which also describe more advanced scenarios like Docker over SSH.

This article also appeared on TUI Musement’s tech blog.